How a cache actually decides what stays in and what gets kicked out. You only have a finite amount of memory in your caching layer. So, if you can’t fit everything into your cache, you’re going to have to make some decisions about what stays in the cache and what results in a database hit. That’s what we call a cache miss. So, if it’s not actually being stored in your cache currently, that’s going to flow right through to the database and repopulate the cache, maybe with that information on a cache miss.

Eviction Policies

Probably the most common policy is called an LRU (Least Recently Used) cache, or at least recently used algorithm for the eviction policy. The idea is that we evict the least recently used item from the cache. So, we keep track of which most recently used items are in the cache. As we request something from the cache, that basically goes to the front of the list, and as we request something else from the cache, that goes to the front-end list in front of the other item. And all the time, the least frequently used, the item that we haven’t looked at in the most amount of time is going to be at the end of our list. So, if we run out of space, that’s where we’re going to start kicking stuff out of the cache to make room for other items.

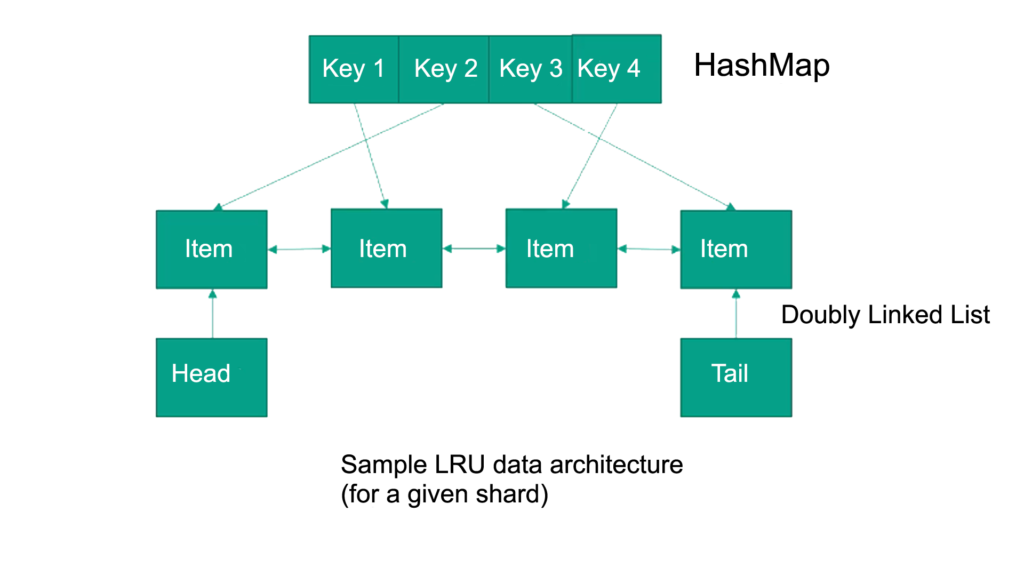

So pretty simple concept, the least recently used eviction policy means the oldest item that I’ve looked at is going to be kicked out if I need space in my cache or LRU for short. And over on the right here, we have sort of a sample data architecture for how that might work.

One way of doing that is by maintaining a HashMap for all our keys and all of those keys point to where that value is stored within the cache. So maybe key one points to item slot number two, and key two points to item slot number one, and so on. But the key is that as I access these items, I’m going to be moving those items around in a doubly linked list. So, as I access something, I’m going to move it to the head. So, let’s say that I just access key number two in this example, that got rearranged in my doubly linked list to the front of my list. That means that I know that this is the most recently used item.

And by doing that over time, the least recently used item would just bubble down to the end automatically. And I might actually keep a separate pointer to that as part of this process where I can quickly point to where the tail is. So as a new item gets pushed to the end, I’m going to update the tail pointer to that. And if that item does get accessed to move to the front, I’ll update the tail to point to the new tail.

So, I’m not going to get into the code of how you might do that, but that’s algorithmically the idea there. I’ve just had this linked list where I’m always moving the most recently accessed item to the front and the least recently accessed item to the end, and I have a head pointer and a tail pointer that allows me to quickly reference either the head or the tail of that list. So, if I need to evict something, I just look at where the tail pointer is, dispose of that item, get rid of its memory, and move the tail item to whatever it was before it. If I access something, I’m going to move it to the front. And that’s where the head pointer will come in handy for quickly adding items to the front of the list.

Other Eviction Strategies

A couple of other ways to do it. And one is LFU (Least Frequently Used). So, this is where we actually keep track of how often a given key is hit over some period of time. Here you can see how that might be a little bit more predictive of what might make sense to actually keep in the cache or not. From a practical standpoint, LRU usually works well enough at scale assuming you have a large enough cache, but if you have a small one, maybe LFU would be the way to go if you need to be a little bit more picky about what stays and what goes.

And finally, there’s FIFO (First In, First Out). Again, it just sounds like what it is. So, the first item that I put into my cache is going to be the first item that gets evicted later.

So, in summary we can have:

- Least Recently Used (LRU)

- How It Works:

- LRU removes the least recently accessed items first.

- Accessed items are moved to the front of a doubly linked list.

- The least recently accessed item is at the end of the list, and the most recently accessed item is at the front.

- Implementation Details:

- A HashMap maps keys to their corresponding positions in the cache.

- A doubly linked list maintains the order of access.Head Pointer: Quickly references the most recently used item.

- Tail Pointer: References the least recently used item for eviction.

- How It Works:

- Least Frequently Used (LFU)

- How It Works:

- LFU tracks how often each key is accessed over a period.

- Less frequently accessed items are evicted.

- When to Use:

- LFU is suitable for smaller caches where fine-grained control over eviction is necessary.

- How It Works:

- First In, First Out (FIFO)

- How It Works:

- The first item added to the cache is the first to be removed.

- Use Case:

- FIFO is simple but may not always align with access patterns.

- How It Works:

Popular Caching Technologies

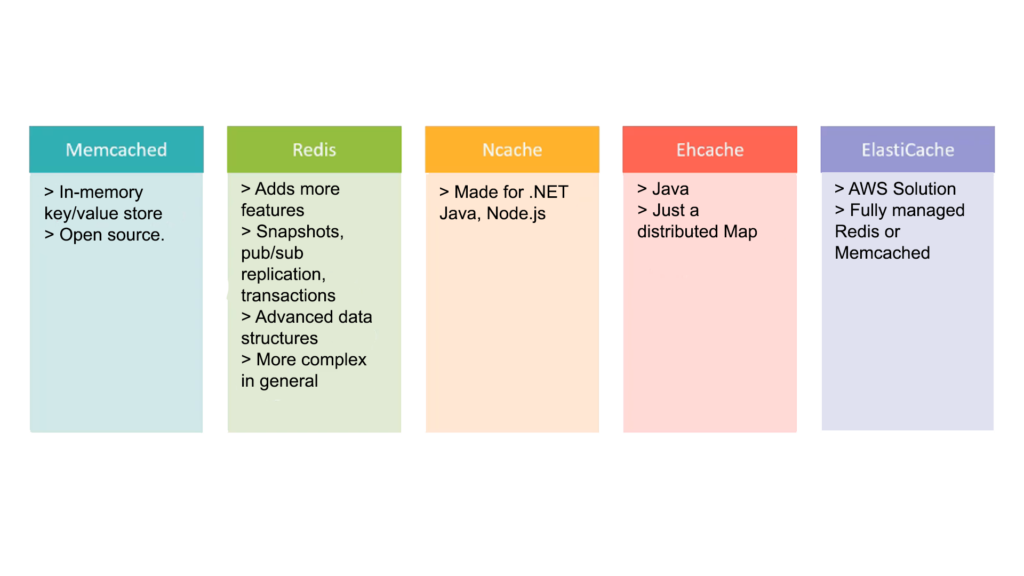

There are a few specific caching technologies. There are two that are kind of the big boys, Memcached and Redis. Redis seems to be the most popular one these days. Memcached is a very simple in-memory key value store. And its API is super simple. You just say, I want to retrieve the value for this key, and if it’s in the cache, give it to me. If not, well, I’ll go get it somewhere else.

Redis is really kind of winning the battle these days. It does have more features, also very battle-hardened, everyone uses it. So, you know it’s going to work, but it has a lot of cool features like snapshots and the ability to replicate and support transactions and also what we call pub/sub. So, I can just publish data from my cache and have subscribers that subscribe to changes in that data automatically. So, it gives you a lot more flexibility at the application of how you use this cache and how you access the data within it. It’s not just a simple key value store. It can also handle advanced data structures. And in general, it is more complex. But again, it is so battle-hardened and so widely used, you can pretty much count on it working reliably.

Other Caching Solutions

A few other ones are Ncache, which is specifically made for the .NET world, also it supports Java and Node.js. There’s also Ehcache, which is a Java caching technology. It’s just a distributed map in Java. So, if you’re writing an application in Java, that might be an option for you.

And there’s also cloud-based caching services as well. One example is ElastiCache from Amazon web services, AWS, where you can just have Amazon cache for you. So, if you’re building a system within AWS, ElastiCache might be your choice because those servers will be within the same data center as your other application components. So, if you’re using DynamoDB, you might put ElastiCache on top of that in front of your application running on Lambda or EC2. So, in the world of AWS, ElastiCache might be what you want. It is basically a fully managed Redis or a Memcached implementation you can choose whichever you want to use. And by fully managed, I mean that you don’t have to worry about actually running the servers and maintaining them. So that’s always a plus. If you want somebody else to run your caching fleet for you, ElastiCache might be the way to go. But again, it is probably only going to be really efficient if you’re using AWS for your entire application stack.

So, in summary we can have the following points:

- Memcached

- Description

- A simple in-memory key-value store.

- Open source, lightweight, and reliable.

- Features

- Minimalist API: Retrieve values by key.

- Proven track record with long-term reliability.

- Advantages

- Best for applications requiring simplicity.

- Description

- Redis

- Description

- A versatile in-memory data structure store.

- Supports advanced features beyond simple key-value storage.

- Features

- Snapshots, replication, transactions, and pub/sub.

- Can handle complex data structures.

- Advantages

- Widely used and highly reliable.

- Offers flexibility in application design.

- Description

- Ncache

- Description

- Specifically designed for .NET applications.

- Also support Java and Node.js.

- Use Cases

- Suitable for .NET environments.

- Description

- Ehcache

- Description

- A Java-based distributed caching solution.

- Use Case

- Ideal for Java applications requiring simple caching.

- Description

- AWS ElastiCache

- Description

- Fully managed caching service from Amazon Web Services.

- Supports both Redis and Memcached.

- Advantages

- Seamless integration with AWS ecosystem.

- Eliminates the need to manage servers.

- Description